Unit 4: Probability Distributions

In this set of notes, you will learn about common probability distributions.

Preparation

Before class you will need to do the following:

- Read Section 3.1.1: Probability Basics in Fox [Required Textbook]

Read Sections 3.3.1–3.3.4 (Continuous Distributions) in Fox [Required Textbook]

Refresh your knowledge about probability distributions by going though the Kahn Academy: Random Variables and Probability Distributions tutorial.

4.1 Dataset and Research Question

In this set of notes, we will not be using a specific dataset.

# Load libraries

library(broom)

library(dplyr)

library(ggplot2)

library(readr)

library(sm)

library(tidyr)4.2 Normal Distribution

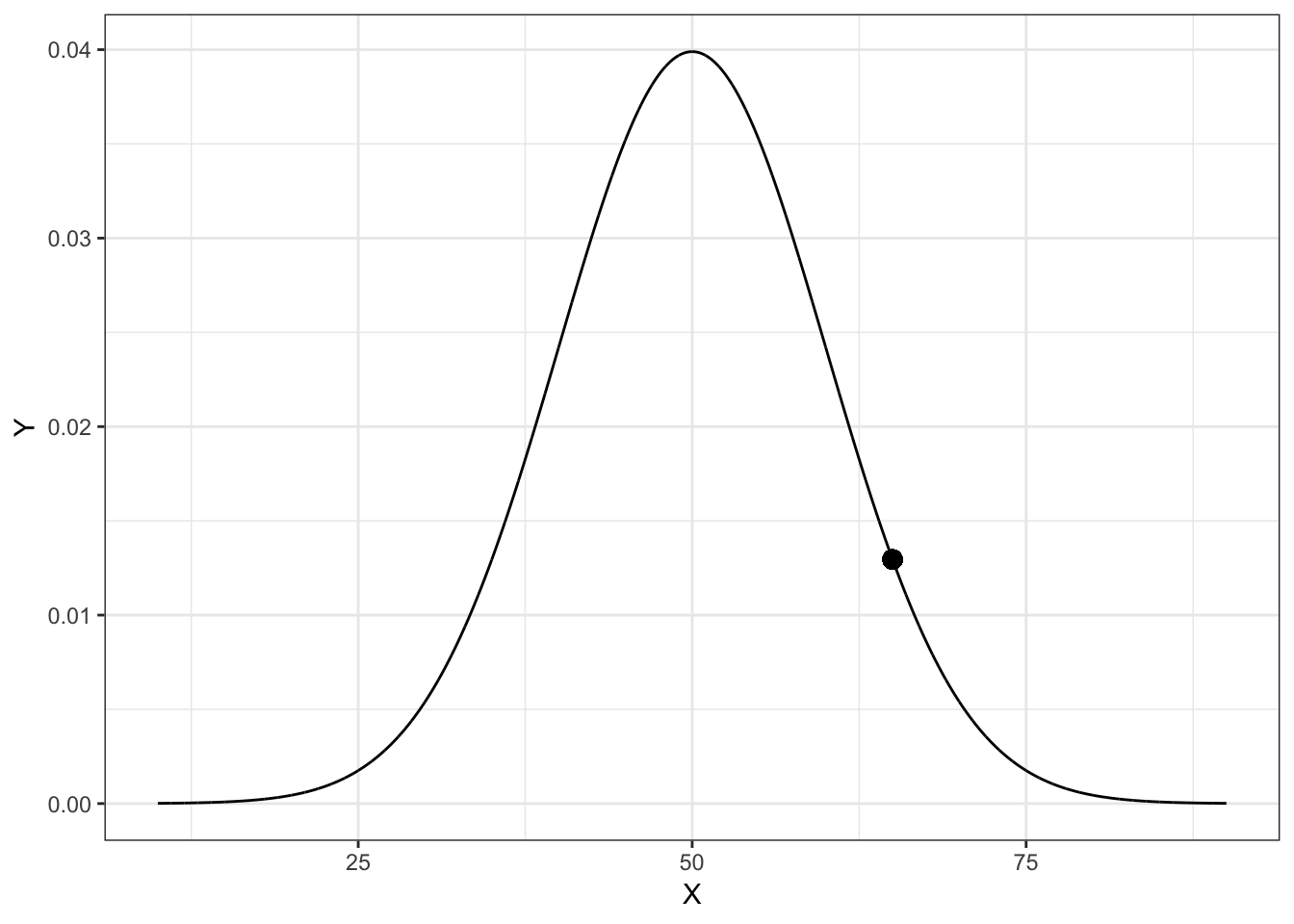

The probability distribution of a normal distribution is mathematically defined as:

\[ p(x) = \frac{1}{\sigma\sqrt{2\pi}}\exp\left[-\frac{(x-\mu)^2}{2\sigma^2}\right] \]

for \(-\infty \leq x \leq \infty\). Consider a normal distribution with a mean (\(\mu\)) of 50, and a standard deviation (\(\sigma\)) of 10. We can compute the probability density (\(p(x)\)) for a particular \(x\) value by using this equation. For example, the probability density for \(x=65\) can be found using,

\[ p(65) = \frac{1}{10\sqrt{2\pi}}\exp\left[-\frac{(65-50)^2}{2\times10^2}\right] = 0.01295176 \]

Using R, we can carry out the computation,

(1 / (10 * sqrt(2 * pi))) * exp(-(225) / 200)[1] 0.01295There is also a more direct way to compute this using the dnorm() function. This function computes the density of x from a normal distribution with a specified mean and sd.

dnorm(x = 65, mean = 50, sd = 10)[1] 0.01295If we compute the density for several \(x\) values and plot them, we get the familiar normal shape; the graphical depiction of the mathematical equation.

# Create dataset

fig_01 = data.frame(

X = seq(from = 10, to = 90, by = 0.01)

) %>%

rowwise() %>%

mutate(

Y = dnorm(x = X, mean = 50, sd = 10)

)

# Create plot

ggplot(data = fig_01, aes(x = X, y = Y)) +

geom_line() +

theme_bw() +

geom_point(x = 65, y = 0.01295176, size = 3)

Figure 4.1: Plot of the probability density function (PDF) for a Normal distribution with mean of 50 and standard deviation of 10. The density value for \(x=65\), \(p(65)= 0.01295176\), is also displayed on the PDF.

4.2.1 Other Useful R Functions for Working with Probability Distributions

There are four primary functions for working with the normal probability distribution:

dnorm(): To compute the probability density (point on the curve)pnorm(): To compute the probability (area under the PDF)qnorm(): To compute the \(x\) value given a particular probabilityrnorm(): To draw a random observation from the distribution

Each of these requires the arguments mean= and sd=. Let’s look at some of them in use.

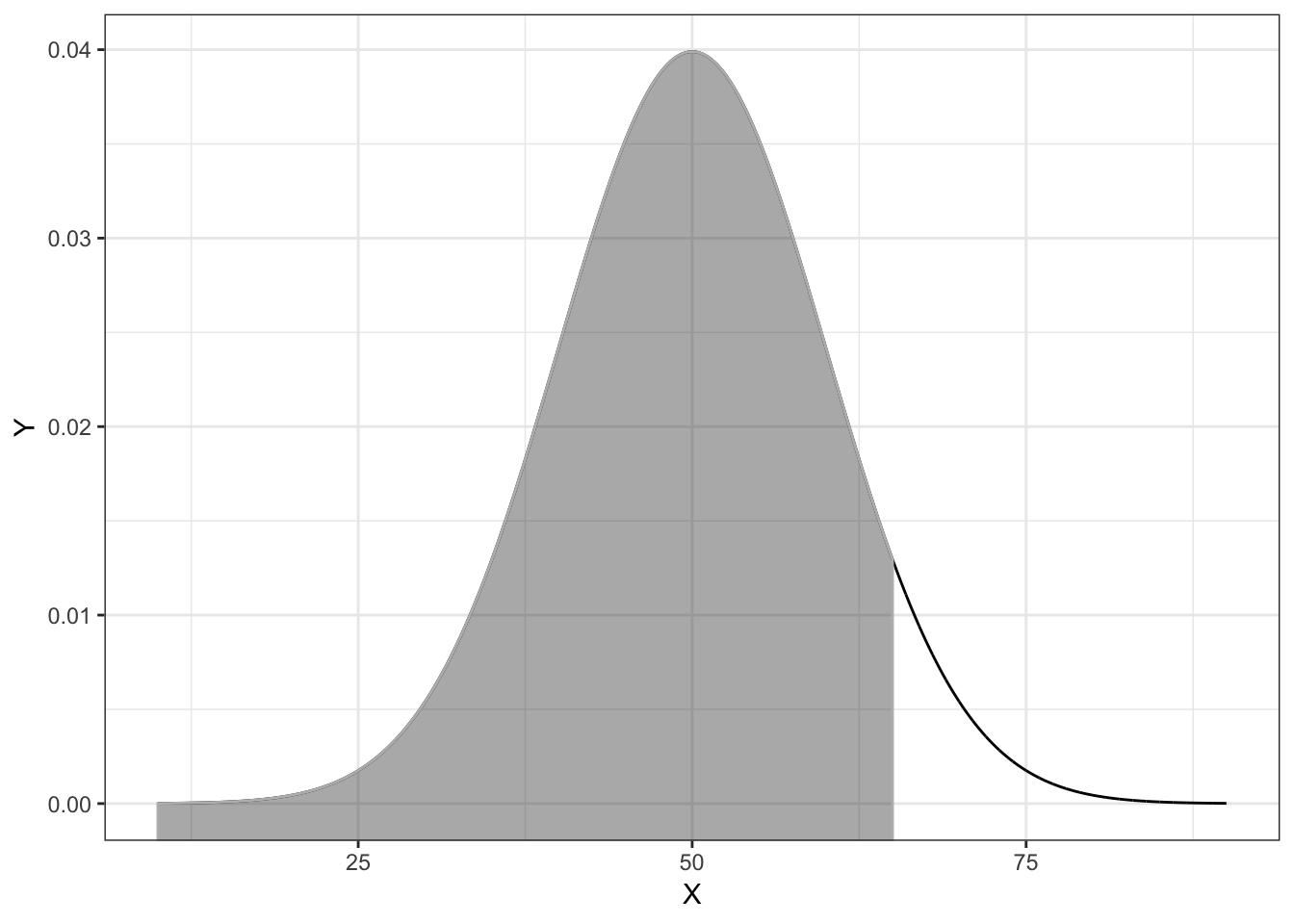

4.2.2 Finding Cumulative Probability

The function pnorm() gives the probability \(x\) is less than or equal to some quantile value in the distribution; the cumulative probability. For example, to find the probability that \(x \leq 65\) we would use,

pnorm(q = 65, mean = 50, sd = 10)[1] 0.9332This is akin to finding the proportion of the area under the normal PDF that is to the left of 65.

Figure 4.2: Plot of the PDF for a normal distribution (M=50, SD=10) with the cumulative probability for X less than or equal to 65 shaded.

For the mathematically inclined, the grey-shaded area is expressed as an integral

\[ \int_{-\infty}^{65} p(x) dx \]

where \(p(x)\) is the PDF for the normal distribution.

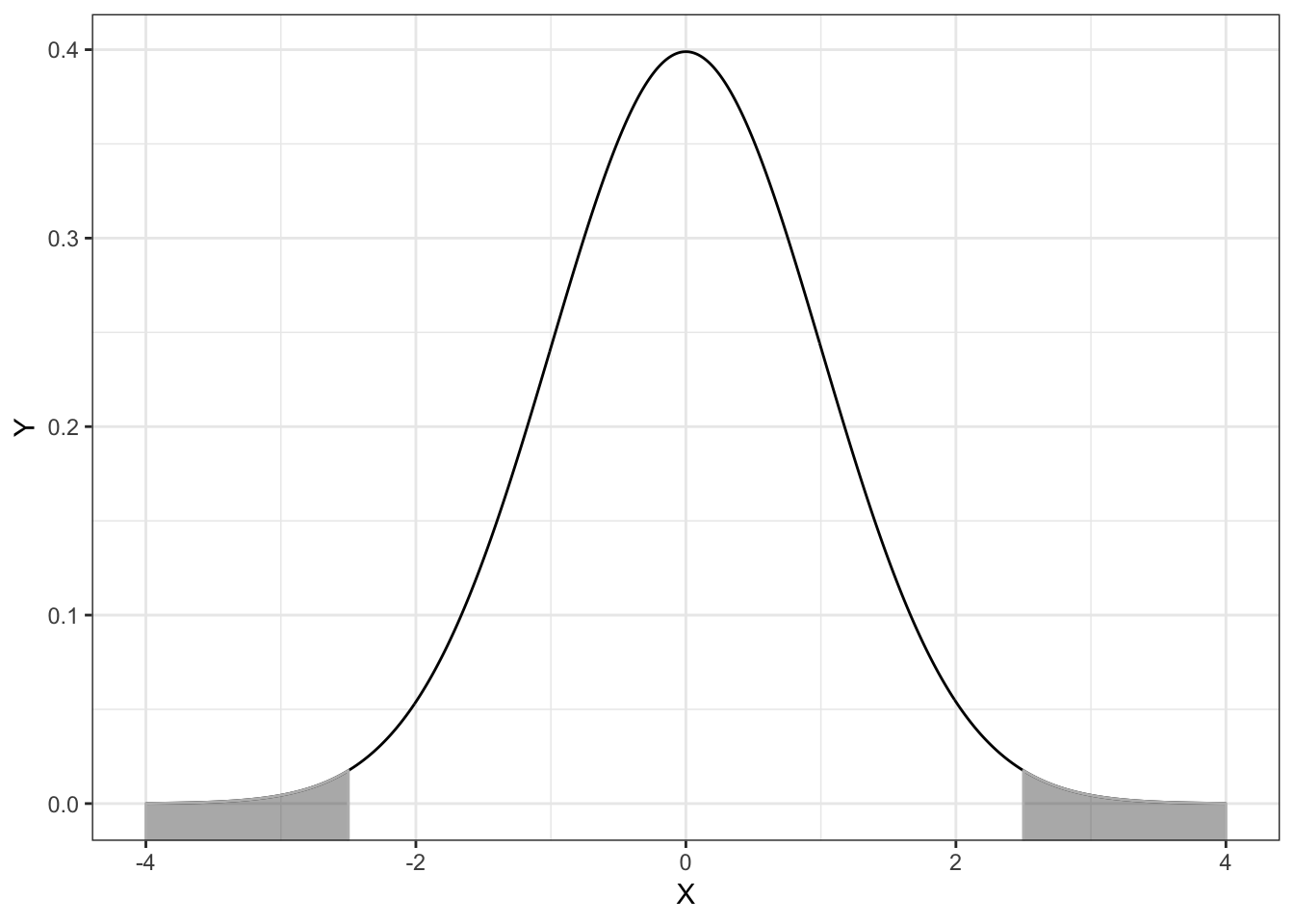

4.2.3 Cumulative Density and \(p\)-Value

This type of computation is used most commonly to find a \(p\)-value. The \(p\)-value is just the area under the distribution (curve) that is AT LEAST as extreme as some observed value. Consider a hypothesis test of whether a population parameter is equal to 0. Also consider that we observed a statistic (that has been standardized) of \(z=2.5\). Then, the \(p\)-value can be graphically displayed in the standard normal distribution as follows:

Figure 4.3: Plot of the probability density function (PDF) for the standard normal distribution (M=0, SD=1). The cumulative density representing the p-value for a two-tailed test evaluating whether mu=0 using an observed z-value of 2.5 is also displayed.

In most hypothesis tests, we test whether the parameter IS EQUAL to 0. Thus the values in the standard normal distribution more extreme than 2.5 encompass evidence against the hypothesis; those values greater than 2.5 and also those values less than \(-2.5\). (This is akin to testing a fair coin when both 8 heads OR 8 tails would provide evidence against fairness we have to consider evidence in both directions).

To compute this we use pnorm(). Remember, it computes the proportion of the area under the curve TO THE LEFT of a particular value. Here we will compute the are to the left of \(-2.5\) and then double it to produce the actual \(p\)-value.

2 * pnorm(q = -2.5, mean = 0, sd = 1)[1] 0.012424.2.4 Finding Quantiles

The qnorm() function is essentially the inverse of the pnorm() function. The p functions find the cumulative probability GIVEN a particular quantile. The q functions find the quantile GIVEN a cumulative probability. For example, in the normal distribution we defined earlier, half of the area is below the quantile value of 50 (the mean).

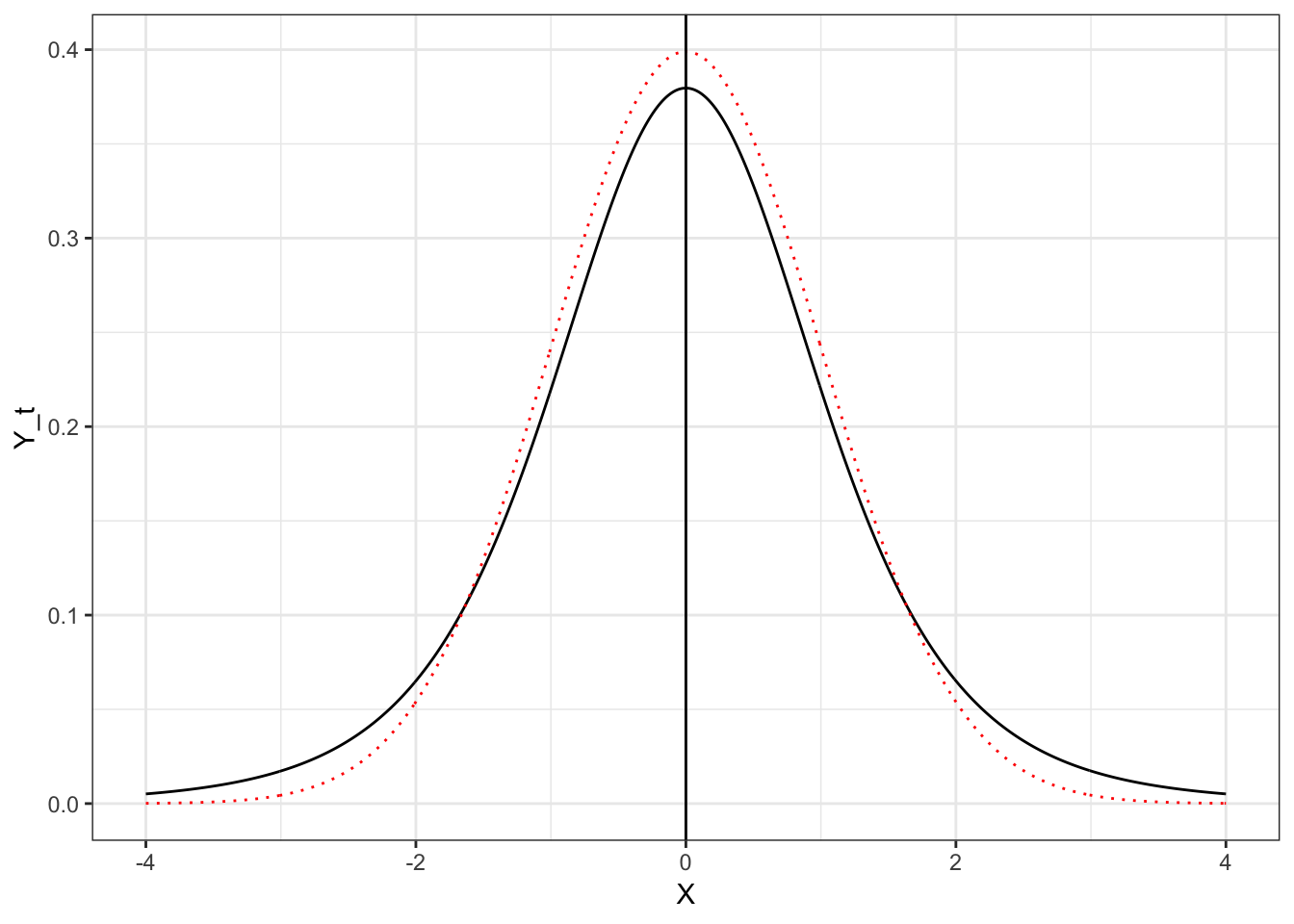

qnorm(p = 0.5, mean = 50, sd = 10)[1] 504.3 Student’s \(t\)-Distribution

Student’s \(t\)-distribution looks like a standard normal distribution. In the figure below, Student’s \(t\)-distribution is depicted with a solid, black line and the standard normal distribution (\(M=0\), \(SD=1\)) is depicted with a dotted, red line.

Figure 4.4: Plot of the probability density function (PDF) for the standard normal distribution (dotted, red line) and Student’s t-distribution with 5 degrees of freedom (solid, black line).

Both the standard normal distribution and Student’s \(t\)-distribution have a mean (expected value) of 0. The standard deviation for Student’s \(t\)-distribution is larger than the standard deviation for the standard normal distribution (\(SD>1\)). You can see this in the distribution because the tails in Student’s \(t\)-distribution are fatter (more error) than the standard normal distribution.

In practice, we often use Student’s \(t\)-distribution rather than the standard normal distribution when we are using sample data to estimate the population. This estimation increases the error and thus is typically modeled using Student’s \(t\)-distribution.

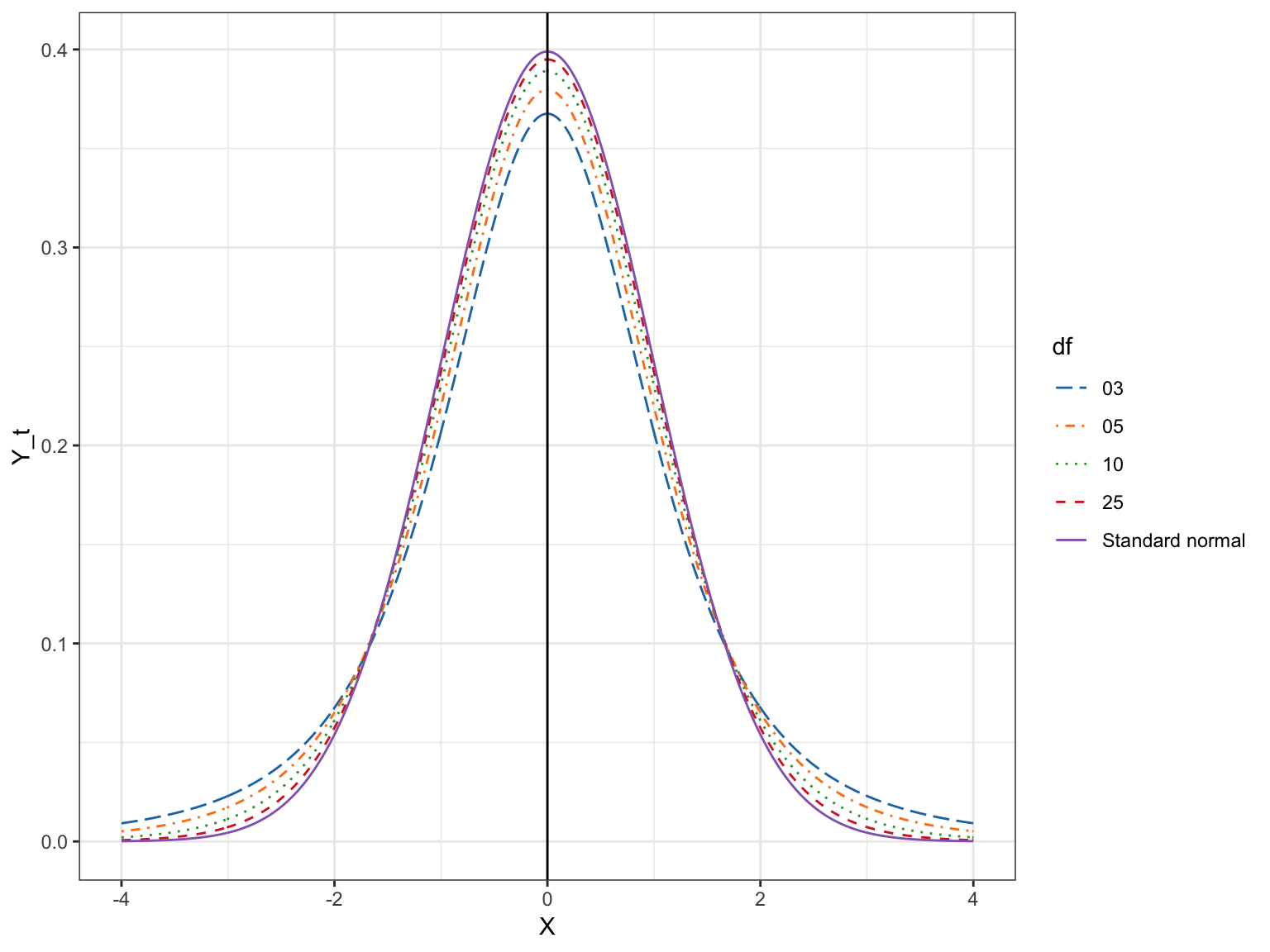

Student’s \(t\)-distribution constitutes a family of distributions—not just a single distribution. The specific shape (and thus probability density) is defined by the degrees of freedom; df. The plot below shows the standard normal distribution (purple) and four \(t\)-distributions with varying df-values.

Figure 4.5: Plot of several t-distributions with differing degrees of freedom.

df M SD

1 03 0 2.00

2 05 0 1.50

3 10 0 1.22

4 25 0 1.08

5 z 0 1.00If we compare the means and SDs for these distributions, we find that the mean for all the \(t\)-distributions is 0, same as the standard normal distribution. All \(t\)-distributions are unimodal and symmetric around zero. The SD for every \(t\)-distribution is higher than the SD for the standard normal distribution. Student \(t\)-distributions with higher df values have less variation. It turns out that the standard normal distribution is a \(t\)-distribution with \(\infty\) df. For the formula for the SD in a \(t\)-distribution, see Fox (2009).

There are four primary functions for working with Student’s \(t\)-distribution:

dt(): To compute the probability density (point on the curve)pt(): To compute the probability (area under the PDF)qt(): To compute the \(x\) value given a particular probabilityrt(): To draw a random observation from the distribution

Each of these requires the arguments df=. Let’s look at some of them in use.

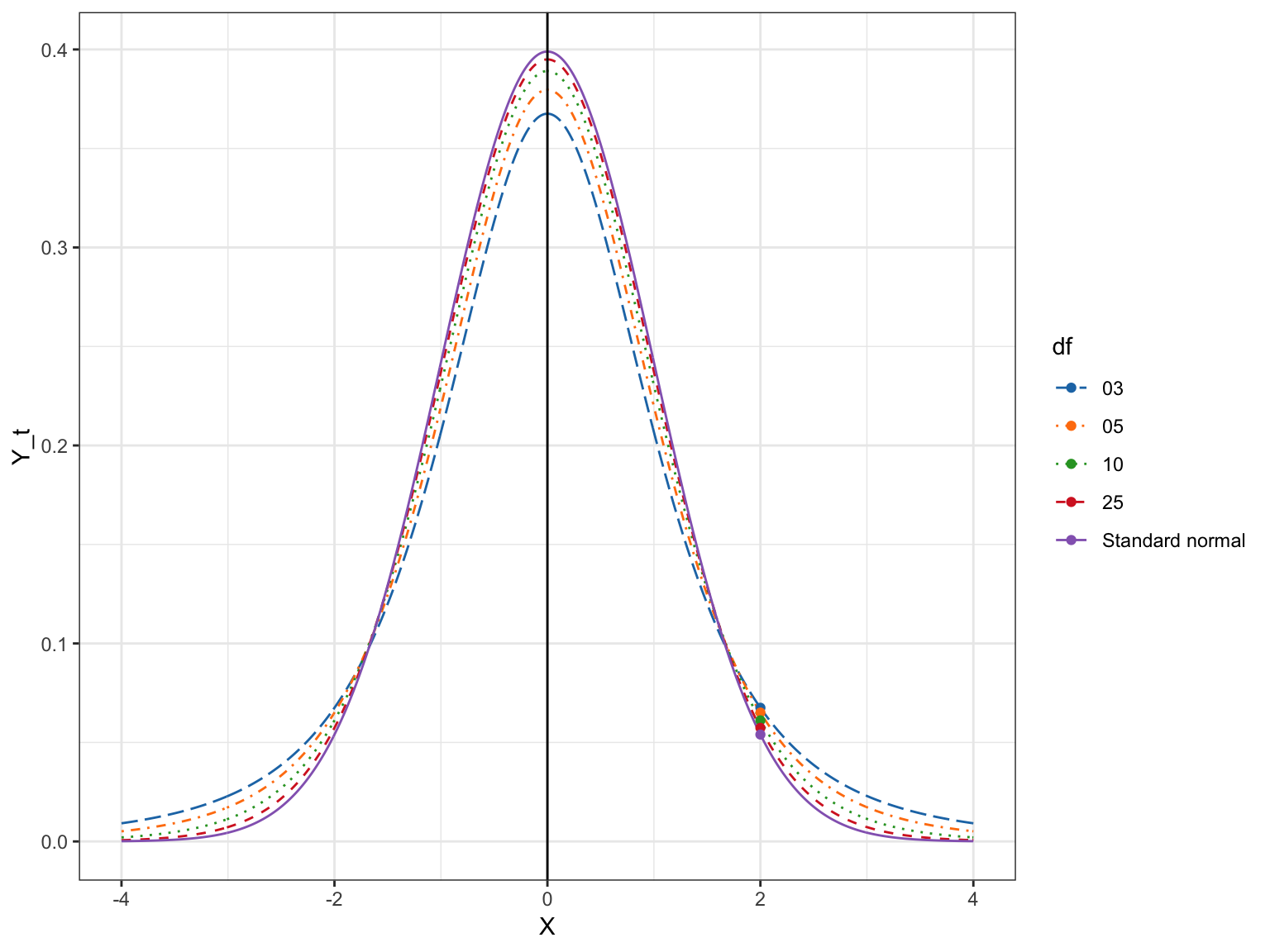

4.3.1 Comparing Probability Densities

How do the probability densities for a value of \(X\) compare across these distributions? Let’s examine the \(X\) value of 2.

# Standard normal distribution

pnorm(q = 2, mean = 0, sd = 1)[1] 0.9772# t-distribution with 3 df

pt(q = 2, df = 3)[1] 0.9303# t-distribution with 5 df

pt(q = 2, df = 5)[1] 0.949# t-distribution with 10 df

pt(q = 2, df = 10)[1] 0.9633# t-distribution with 25 df

pt(q = 2, df = 25)[1] 0.9718We are essentially comparing the height of these distributions at \(X=2\).

Figure 4.6: Plot of several t-distributions with differing *degrees of freedom. The probability density for t=2 is also displayed for each of the distributions.

4.3.2 Comparing Cumulative Densities

What if we wanted to look at cumulative density? Consider out hypothesis test of whether a population parameter is equal to 0. Also consider that we observed a statistic (that has been standardized) of 2.5 using a sample size of \(n=15\).

If we can assume that the SAMPLING DISTRIBUTION is normally-distributed then we can use the cumulative density in a normal distribution to compute a \(p\)-value:

2 * pnorm(q = -2.5, mean = 0, sd = 1)[1] 0.01242If, however, the SAMPLING DISTRIBUTION is \(t\)-distributed then we need to use the cumulative density for a \(t\)-distribution with the appropriate df to compute a \(p\)-value. For example if we use \(df=n-1\), the two-tailed \(p\)-value would be:

2 * pt(q = -2.5, df = 14)[1] 0.02547The \(p\)-value using the \(t\)-distribution is larger than the \(p\)-value computed based on the standard normal distribution. This is again because of the increased error (uncertainty) we are introducing when we estimate from sample. This added uncertainty makes it harder for us to reject a hypothesis.

4.4 Using the \(t\)-Distribution in Regression

To illustrate how probability distributions are used in practice, we will will use the riverview.csv (see the data codebook here) and fit a regression model that uses education level and seniority to predict variation in employee income.

# Read in data

city = read_csv(file = "~/Documents/github/epsy-8252/data/riverview.csv")

head(city)# A tibble: 6 x 6

education income seniority gender male party

<dbl> <dbl> <dbl> <chr> <dbl> <chr>

1 8 37449 7 male 1 Democrat

2 8 26430 9 female 0 Independent

3 10 47034 14 male 1 Democrat

4 10 34182 16 female 0 Independent

5 10 25479 1 female 0 Republican

6 12 46488 11 female 0 Democrat # Fit regression model

lm.1 = lm(income ~ 1 + education + seniority, data = city)

# Coefficient-level output

tidy(lm.1)# A tibble: 3 x 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 6769. 5373. 1.26 0.218

2 education 2252. 335. 6.73 0.000000220

3 seniority 739. 210. 3.52 0.00146 How do we obtain the \(p\)-value for each of the coefficients? Recall that the coefficients and SEs for the coefficients are computed directly from the raw data. Then we can compute a test-statistic by dividing the coefficient estimate by the SE. For example, to compute the test-statistic associated with education level:

\[ t = \frac{2252}{335} = 6.72 \]

Since we are estimating the SE using sample data, our test statistic is likely \(t\)-distributed. Which value should we use for df? Well, for that, statistical theory tells us that we should use the error df value. In our data,

\[ \begin{split} n &= 32 \\ \mathrm{Total~df} &= 32-1 = 31\\ \mathrm{Model~df} &= 2~\mathrm{(two~predictors)} \\ \mathrm{Error~df} &= 31-2 = 29 \end{split} \]

Using the \(t\)-distribution with 29 df,

2 * pt(q = -6.72, df = 29)[1] 0.0000002257For seniority (and the intercept), we would use the same \(t\)-distribution, but our test statistic would differ:

\[ \begin{split} t_{\mathrm{Intercept}} &= \frac{6769}{5373} = 1.26 \\ t_{\mathrm{Seniority}} &= \frac{739}{210} = 3.52 \\ \end{split} \]

The associated \(p\)-values are:

# Intercept p-value

2 * pt(q = -1.26, df = 29)[1] 0.2177# Seniority p-value

2 * pt(q = -3.52, df = 29)[1] 0.0014464.5 Model-Level Inference: The \(F\)-Distribution

The model-level inference for regression is based on an \(F\)-statistic, which is a standardized measure of \(R^2\).

# Model-level output

glance(lm.1)# A tibble: 1 x 11

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <int> <dbl> <dbl> <dbl>

1 0.742 0.724 7646. 41.7 2.98e-9 3 -330. 668. 674.

# … with 2 more variables: deviance <dbl>, df.residual <int>In this example, the sample \(R^2\) value is 0.742. Computation of the \(F\)-statistic relies on two df values—the model degrees of freedom (2) and the error degrees of freedom (29). To compute the \(F\)-statistics from \(R^2\) we use:

\[ F = \frac{R^2}{1-R^2} \times \frac{\mathrm{df}_{\mathrm{Error}}}{\mathrm{df}_{\mathrm{Model}}} \]

In our example, we compute \(F\) as:

\[ \begin{split} F &= \frac{0.742}{1-0.742} \times \frac{29}{2} \\ &= 41.7 \end{split} \]

We write this standardization of \(R^2\) as \(F(2,29)=41.7\).

4.5.1 Testing the Model-Level Null Hypothesis

It is often worth testing whether the model explains a statistically significant amount of variation in the population. To do this we test the null hypothesis:

\[ H_0:\rho^2 = 0 \]

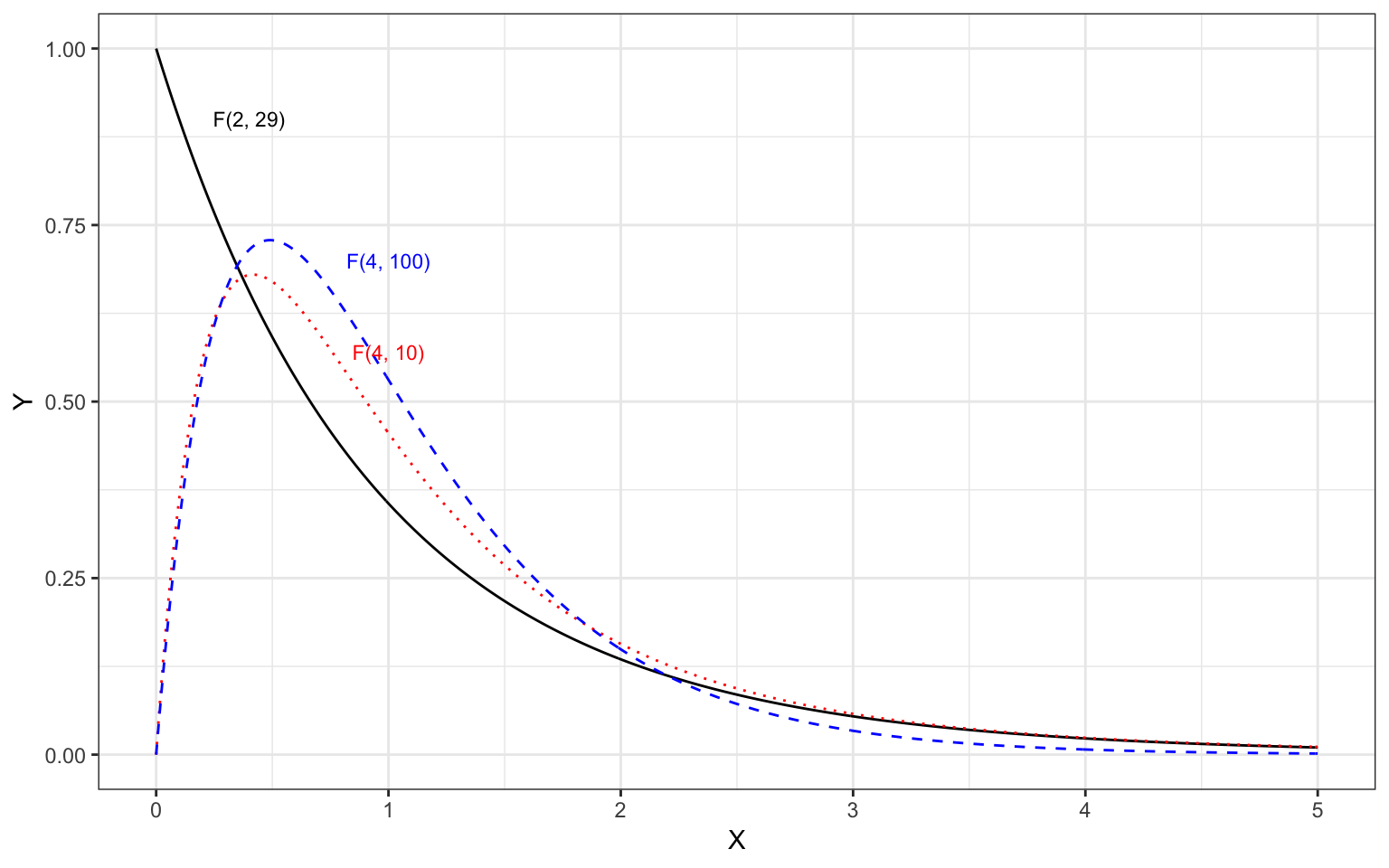

Similar to the tests of the coefficients, we evaluate our test statistic (\(F\) in this case) in the appropriate test distribution, in this case an \(F\)-distribution with 2 and 29 degrees of freedom. (The shape of the \(F\)-distribution is based on two df values.) The figure below, shows the \(F(2,29)\)-distribution as a solid, black line.

Figure 4.7: Plot of several F-distributions with differing degrees of freedom. The F(2,29)-distribution is shown as a solid, black line.

The \(F\)-distribution, like the \(t\)-distribution is a family of distributions. They are positively skewed and generally have a lower-limit of 0. Because of this, when we use the \(F\)-distribution to compute a \(p\)-value, we only compute the cumulative density GREATER THAN OR EQUAL TO the value of the standardized test statistic.

4.5.1.1 Computing F from the ANOVA Partitioning

We can also compute the model-level \(F\)-statistic using the partitioning of variation from the ANOVA table.

anova(lm.1)Analysis of Variance Table

Response: income

Df Sum Sq Mean Sq F value Pr(>F)

education 1 4147330492 4147330492 70.9 0.0000000028 ***

seniority 1 722883649 722883649 12.4 0.0015 **

Residuals 29 1695313285 58459079

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The \(F\)-statistic is a ratio of the mean square for the model and the mean square for the error. To compute a mean square we use the general computation

\[ \mathrm{MS} = \frac{\mathrm{SS}}{\mathrm{df}} \]

The model includes both the education and seniority predictor, so we combine the SS and df. The MS model is:

\[ \begin{split} \mathrm{MS}_{\mathrm{Model}} &= \frac{\mathrm{SS}_{\mathrm{Model}}}{\mathrm{df}_{\mathrm{Model}}} \\ &= \frac{4147330492 + 722883649}{1 + 1} \\ &= \frac{4870214141}{2} \\ &= 2435107070 \end{split} \]

The MS error is:

\[ \begin{split} \mathrm{MS}_{\mathrm{Error}} &= \frac{\mathrm{SS}_{\mathrm{Error}}}{\mathrm{df}_{\mathrm{Error}}} \\ &= \frac{1695313285 }{29} \\ &= 58459079 \end{split} \]

Then, we compute the \(F\)-statistic by computing the ratio of these two mean squares.

\[ \begin{split} F &= \frac{\mathrm{MS}_{\mathrm{Model}}}{\mathrm{MS}_{\mathrm{Error}}} \\ &= \frac{2435107070}{58459079} \\ &= 41.7 \end{split} \]

This is the observed \(F\)-statistic for the model. Note that this is an identical computation (although reframed) as the initial computation for \(F\).

$$ \begin{split} F &= \[1em] &= \[1em] &= \[1em] &= \[1em]

&= _{} \[1em] &= \end{split} $$

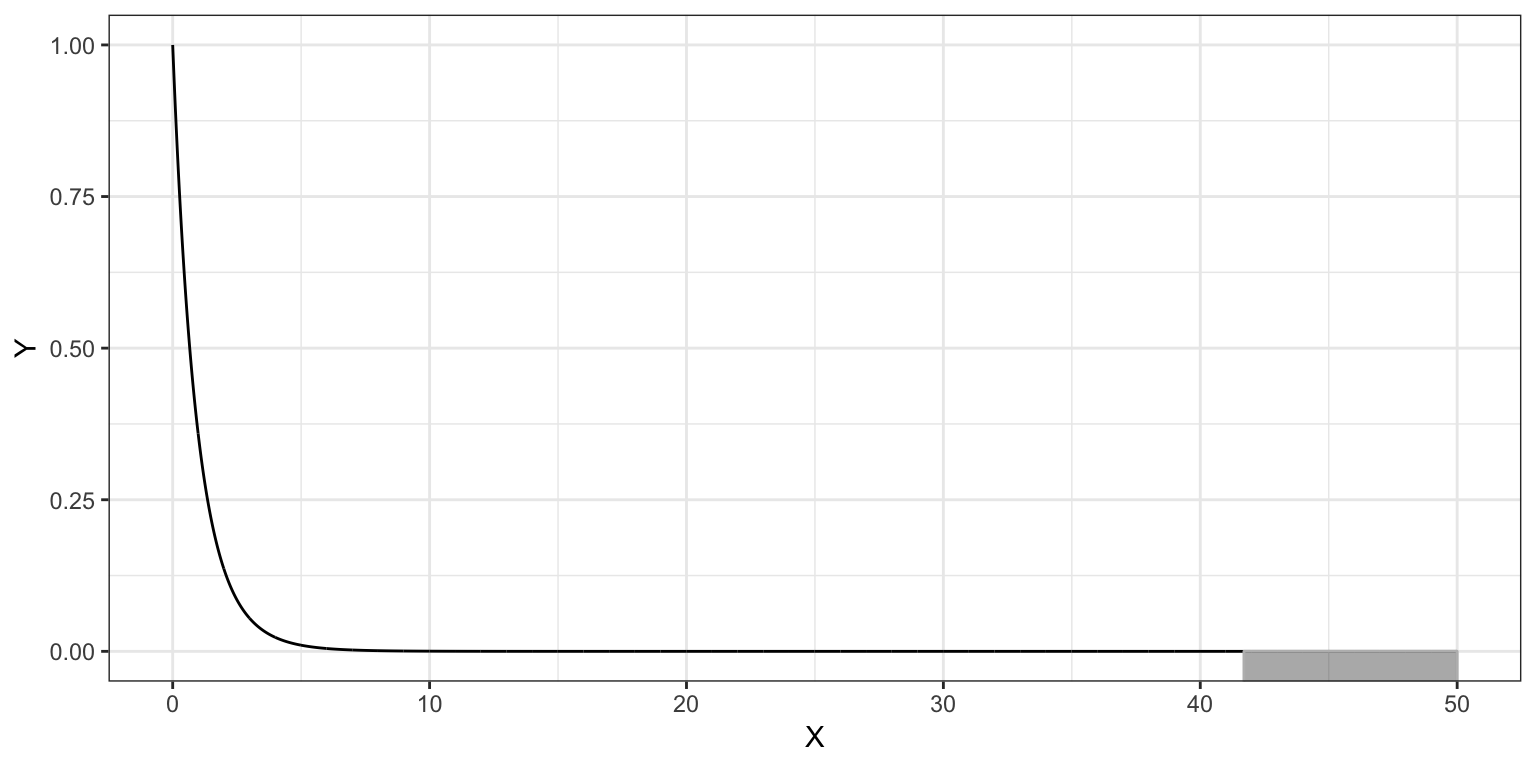

To test the null hypothesis, \(H_0:\rho^2=0\), we evaluate this observed \(F\)-statistic in an \(F\)-distribution with the 2 and 29 degrees of freedom.

Figure 4.8: Plot of the probability density function (PDF) for the F-distribution with 2 and 29 degrees of freedom. The cumulative density representing the p-value for a two-tailed test evaluating whether rho-squared=0 using an observed F-statistic of 41.7 is also displayed.

The computation using the cumulative density function, pf(), to obtain the \(p\)-value is:

1 - pf(41.7, df1 = 2, df2 = 29)[1] 0.0000000029424.6 Mean Squares are Variance Estimates

Mean squares are estimates of the variance. Consider the computational formula for the sample variance,

\[ \hat{\sigma}^2 = \frac{\sum(Y - \bar{Y})^2}{n-1} \]

This is the total sum of squares divided by the total df. When we compute an \(F\)-statistic, we are finding the ratio of two different variance estimates—one based on the model (explained variance) and one based on the error (unexplained variance). Under the null hypothesis that \(\rho^2 = 0\), we are assuming that all the variance is unexplained. In that case, our \(F\)-statistic would be close to zero. When the model explains a significant amount of variation, the numerator gets larger relative to the denominator and the \(F\)-value is larger.

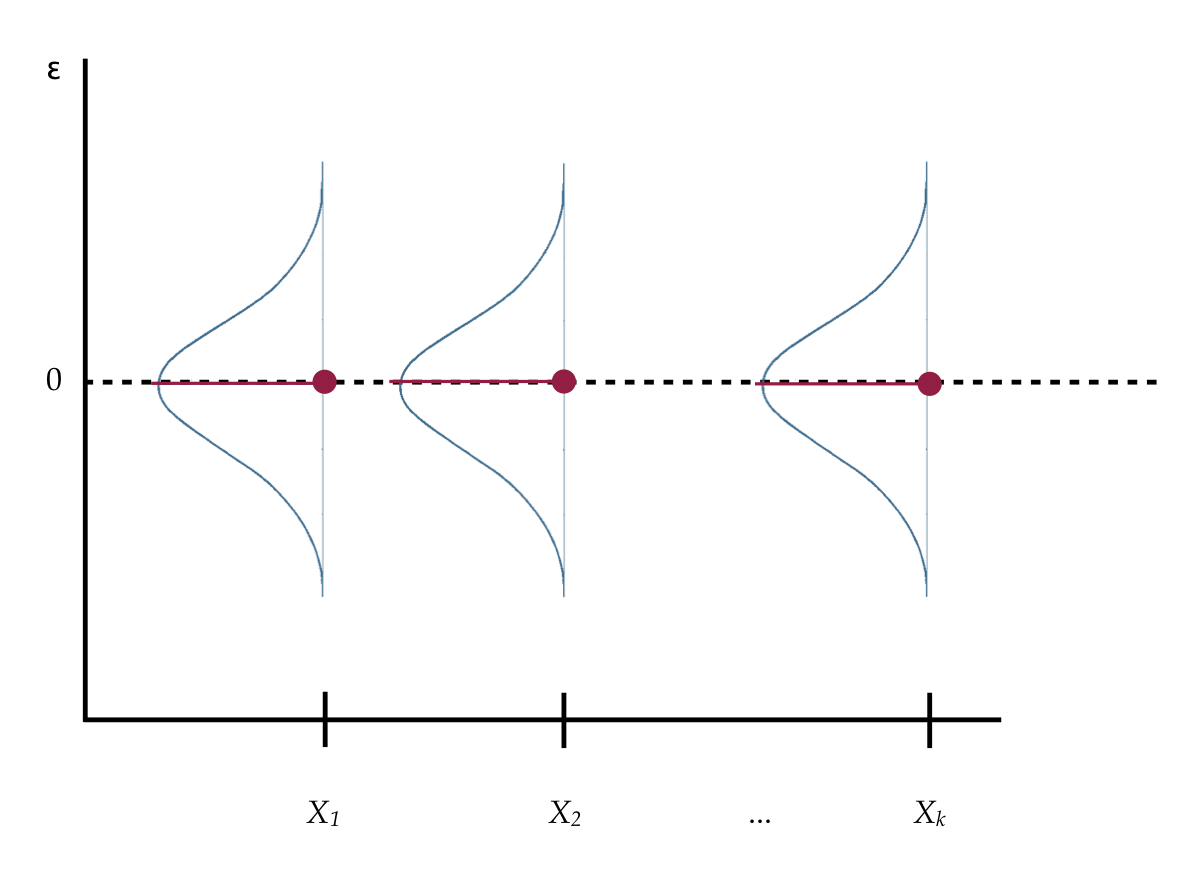

The mean squared error (from the anova() output) plays a special role in regression analysis. It is the variance estimate for the conditional distributions of the residuals in our visual depiction of the distributional assumptions of the residuals underlying linear regression.

Figure 4.9: Visual depiction of the distributional assumptions of the residuals underlying linear regression.

Recall that we made implicit assumptions about the conditional distributions of the residuals, namely that they were identically and normally distributed with a mean of zero and some variance. Based on the estimate of the mean squared error, the variance of each of these distributions is 58,459,079.

While the variance is a mathematical convenience, the standard deviation is a better descriptor of the variation in these distributions. The standard deviation is 7646.

sqrt(58459079)[1] 7646We can also obtain this value from the model-level regression output. Here it is typically referred to as the Root Mean Squared Error (RMSE). In the glance() output this value is in the sigma column.

glance(lm.1)# A tibble: 1 x 11

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <int> <dbl> <dbl> <dbl>

1 0.742 0.724 7646. 41.7 2.98e-9 3 -330. 668. 674.

# … with 2 more variables: deviance <dbl>, df.residual <int>Why is this value important? It gives the expected variation in the distribution. For example, since all of the conditional distributions of the residuals are normally distributed, we would expect that 95% of the residuals would fall between \(\pm2\) standard errors from 0; or, in this case, between \(-15292\) and 15292. Observations with residuals that are more extreme may be regression outliers.

References

Fox, J. (2009). A mathematical primer for social statistics. Thousand Oaks, CA: Sage.